Publications [count: 25]

Further publications from the MADM Research Group can be found in our pubs repository.

Automatic Concept-to-Query Mapping for Web-based Concept Detector Training

Damian Borth, Adrian Ulges, Thomas Breuel, to appear at ACM Multimedia, 2011

Nowadays, online platforms like YouTube provide massive amounts of content for training visual concept detectors. However, retrieving the right training content from such platforms remains a difficult challenge. In this paper, we present an approach offering an automatic concept-to-query mapping for training data acquisition. Queries are constructed automatically by keyword term selection and category assignment through external sources like ImageNet and Google Sets. Our results demonstrate that the proposed method reaches retrieval results comparable to queries constructed by humans, providing 76% more relevant content for detector training than a one-to-one mapping of concept names to retrieval queries would.

Smart Video Buddy - Content-based Live Recommendation

Damian Borth, Christian Kofler, Adrian Ulges, IEEE ICME, 2011

In this technical demonstration, we present Smart Video Buddy, a content-based live recommender that links video streams with related information. The system performs a real-time analysis of video content by feeding a scene's color and local patches to statistical learners, which detect semantic concepts in the video stream. Based on the recognition results, recommendations for news, products, and links are made and automatically adapted to what is being watched in real time. This way, passive video consumption is turned into an interactive experience.

Learning Visual Contexts for Image Annotation from Flickr Groups

Adrian Ulges, Marcel Worring, and Thomas Breuel, IEEE Transactions on Multimedia, 2011

We present an extension of automatic image annotation that takes the context of a picture into account. Our core assumption is that users not only provide individual images to be tagged but also group their pictures into batches (e.g., all snapshots taken over the same holiday trip), and that the images within a batch are likely to share a common style. These batches are matched with categories learned from Flickr groups, and an accurate context-specific annotation is performed. In quantitative experiments, we demonstrate that Flickr groups, with their user-driven categorization and their rich group space, provide an excellent basis for learning context categories. Our approach, which can be integrated with virtually any annotation model, is shown to give significant improvements of more than 100% compared to standard annotation of individual images.

Lookapp - Interactive Construction of Web-based Concept Detectors

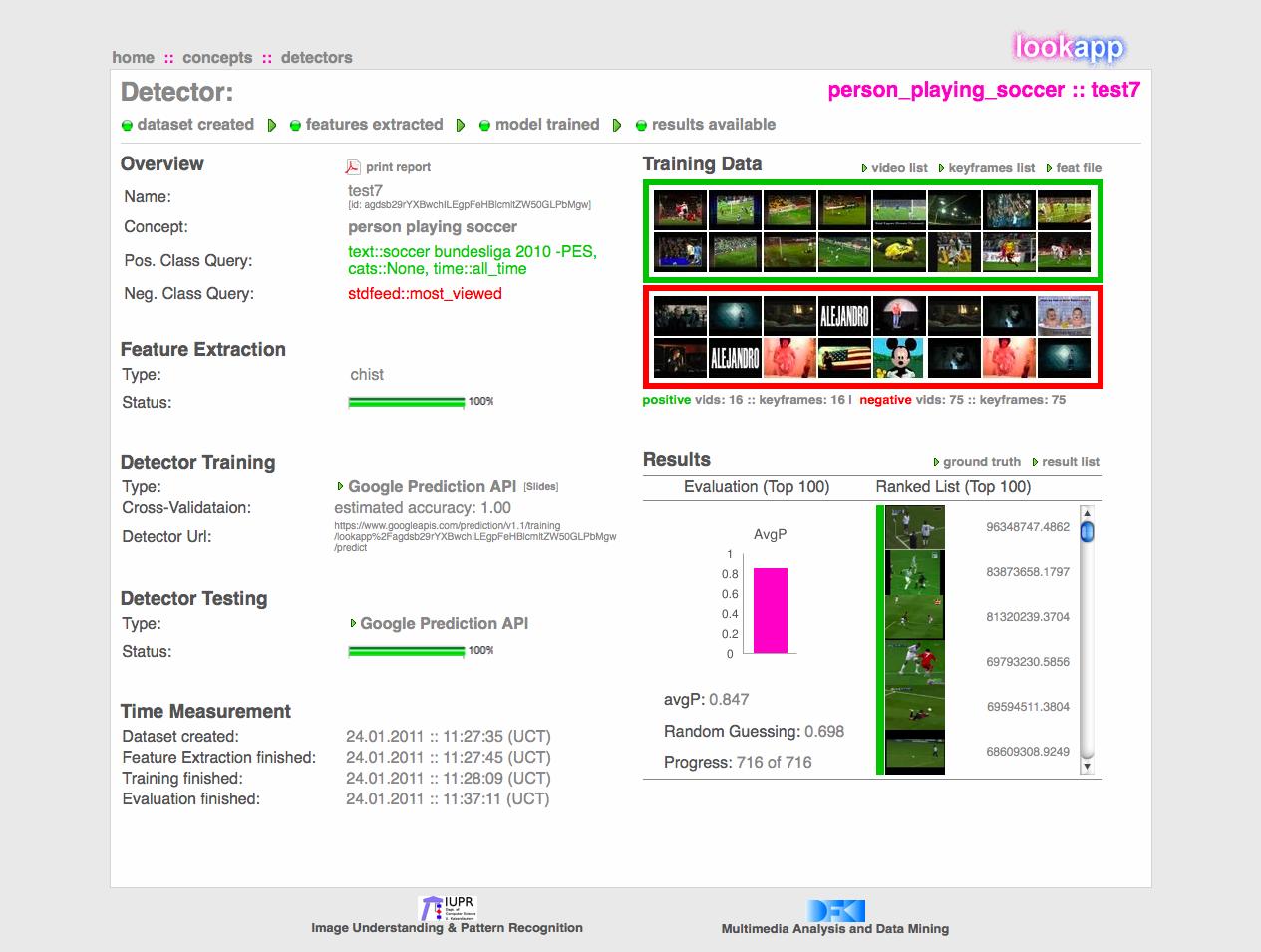

Damian Borth, Adrian Ulges, Thomas Breuel, ACM ICMR, 2011

While online platforms like YouTube and Flickr provide massive amounts of content for training visual concept detectors, retrieving the right training content from such platforms remains a difficult challenge. In this technical demonstration, we present lookapp, a system for the interactive construction of web-based concept detectors. Its major features are an interactive "concept-to-query" mapping for training data acquisition and efficient detector construction based on third-party cloud computing services.

DFKI and Uni. of Kaiserslautern Participation at TRECVID 2010 - Semantic Indexing Task

Damian Borth, Adrian Ulges, Markus Koch, Thomas Breuel, TRECVID Workshop, 2010

This paper describes the TRECVID 2010 participation of the DFKI-MADM team in the semantic indexing task. This year's participation was dominated by two aspects: a new dataset and a large vocabulary of 130 concepts. For the annual TRECVID benchmark, this means scaling label annotation efforts to significantly larger concept vocabularies and datasets. To reduce this effort, we aim to automatically acquire training data from online video portals like YouTube and to use the tags associated with each video as concept labels. Similar to last year's participation, results for the evaluated subset of concepts show that effects like label noise and domain change lead to a performance loss (infMAP 2.1% and 1.3%) compared to purely TRECVID-trained concept detectors (infMAP 5.0% and 4.4%). Nevertheless, for individual concepts like "demonstration or protest" or "bus", automatic learning from online video portals is a valid alternative to expert-labeled training datasets. Furthermore, the results indicate that the fusion of multiple features helps to improve detection precision.

Can Motion Segmentation Improve Patch-based Object Recognition?

Adrian Ulges, Thomas Breuel, ICPR, 2010

Patch-based methods, which constitute the state of the art in object recognition, are often applied to video data, where motion information provides a valuable clue for separating objects of interest from the background. We show that such motion-based segmentation improves the robustness of patch-based recognition with respect to clutter. Our approach, which employs segmentation information to rule out incorrect correspondences between training and test views, is shown to clearly outperform baselines operating on unsegmented images. Relative improvements reach 50% for the recognition of specific objects and 33% for object category retrieval.

Adapting Web-based Video Concept Detectors for Different Target Domains

Damian Borth, Adrian Ulges, Thomas Breuel, to appear in the Internet Multimedia Search and Mining (IMSM) book by Bentham Science Publishers, 2010

In this chapter, we address the visual learning of automatic concept detectors from web video as available from services like YouTube. While web-based learning allows a much more efficient, flexible, and scalable concept learning than expert labels, web-based detectors perform poorly when applied to different domains (such as specific TV channels). We address this domain change problem using a novel approach which, after initial training on web content, performs a highly efficient online adaptation to the target domain. In quantitative experiments on data from YouTube and from the TRECVID campaign, we first validate that domain change appears to be the key problem for web-based concept learning, with a much more significant impact than other phenomena like label noise. Second, the proposed adaptation is shown to improve the accuracy of web-based detectors significantly, even over SVMs trained on the target domain. Finally, we extend our approach with active learning such that adaptation can be interleaved with manual annotation for an efficient exploration of novel domains.

Relevance Filtering meets Active Learning: Improving Web-based Concept Detectors

Damian Borth, Adrian Ulges, Thomas Breuel, ACM MIR, 2010

We address the challenge of training visual concept detectors on web video as available from portals such as YouTube. In contrast to high-quality but small manually acquired training sets, this setup permits us to scale up concept detection to very large training sets and concept vocabularies. On the downside, web tags are only weak indicators of concept presence, and web video training data contains a lot of non-relevant content. So far, there are two general strategies to overcome this label noise problem, both targeted at discarding non-relevant training content: (1) manual refinement supported by active learning sample selection, and (2) automatic refinement using relevance filtering. In this paper, we present a highly efficient approach combining these two strategies in an interleaved setup: manually refined samples are directly used to improve relevance filtering, which in turn provides a good basis for the next active learning sample selection. Our results demonstrate that the proposed combination, called active relevance filtering, outperforms both purely automatic filtering and manual filtering based on active learning. For example, using 50 manual labels per concept yields an improvement of 5% over automatic filtering and 6% over active learning. By labeling only 25% of the weak positive labels in the training set, a performance comparable to training on ground truth labels is reached.

Topic Models for Semantics-preserving Video Compression

Jörn Wanke, Adrian Ulges, Christoph Lampert, Thomas Breuel, ACM MIR, 2010

Content-based video understanding tasks such as auto-annotation or clustering are based on low-level descriptors of video content, which should be compact in order to optimize storage requirements and efficiency. In this paper, we address the semantic compression of video, i.e., the reduction of low-level descriptors to a few semantically expressive dimensions. To achieve this, topic models have been proposed, which cluster visual content into a small number of latent aspects and have previously been applied successfully to still images. In this paper, we investigate topic models for the video domain, addressing several key questions that have remained unanswered so far: (1) data: we confirm the good performance of topic models for concept detection on web video data, showing that a performance comparable to bag-of-visual-words descriptors can be reached at a compression rate of 1/20; (2) diversity: we demonstrate that topic models perform best when trained on large-scale, diverse datasets, i.e., no tedious manual pre-selection is required; (3) multi-modal integration: we show how topic models can benefit from an integration of multi-modal features, like motion and patches; and finally (4) temporal structure: by extending topic models such that the shot structure of video is taken into account, we show that a better coverage between topics and semantic categories can be achieved.

Visual Concept Learning from Weakly Labeled Web Videos

Adrian Ulges, Damian Borth, Thomas Breuel, to appear in the Video Search and Mining (VSM) book by Springer-Verlag, 2009

Concept detection is an important component of video database search, concerned with the automatic recognition of visually diverse categories of objects ("airplane"), locations ("desert"), or activities ("interview"). The task poses a difficult challenge, as the amount of accurately labeled data available for supervised training is limited and the coverage of concept classes is poor. To overcome these problems, we describe the use of videos found on the web as training data for concept detectors, using tagging and folksonomies as annotation sources. This permits us to scale up training to very large datasets and concept vocabularies. To take advantage of user-supplied tags on the web, we need to overcome problems of label weakness: web tags are content-dependent, unreliable, and coarse. Our approach to this problem is to automatically identify and filter out non-relevant material. We demonstrate on a large database of videos retrieved from the web that this approach, called relevance filtering, leads to significant improvements over supervised learning techniques for categorization. In addition, we show how the approach can be combined with active learning to achieve additional performance improvements at moderate annotation cost.

Learning Automatic Concept Detectors from Online Video

Adrian Ulges, Christian Schulze, Markus Koch, Thomas Breuel, Computer Vision and Image Understanding, Elsevier, 2009

Concept detection is targeted at automatically labeling video content with the semantic concepts appearing in it, like objects, locations, or activities. While concept detectors have become key components in many research prototypes for content-based video retrieval, their practical use is limited by the need for large-scale annotated training sets. To overcome this problem, we propose to train concept detectors on material downloaded from web-based video sharing portals like YouTube, such that training is based on tags given by users during upload, no manual annotation is required, and concept detection can scale up to thousands of concepts. On the downside, web video as training material is a complex domain, and the tags associated with it are weak and unreliable. Consequently, a performance loss is to be expected when replacing high-quality state-of-the-art training sets with web video content. This paper presents a concept detection prototype named TubeTagger that utilizes YouTube content for autonomous training. In quantitative experiments, we compare the performance when training on web video and on standard datasets from the literature. It is demonstrated that concept detection in web video is feasible and that, when testing on YouTube videos, the YouTube-based detector outperforms the ones trained on standard training sets. By applying the YouTube-based prototype to datasets from the literature, we further demonstrate that: (1) if training annotations on the target domain are available, the resulting detectors significantly outperform the YouTube-based tagger; (2) if no annotations are available, the YouTube-based detector achieves performance comparable to the ones trained on standard datasets (a moderate relative performance loss of 11.4% is measured) while offering the advantage of fully automatic, scalable learning; and (3) by enriching conventional training sets with online video material, performance improvements of 11.7% can be achieved when generalizing to domains unseen in training.

TubeTagger - YouTube-based Concept Detection

Adrian Ulges, Markus Koch, Damian Borth, Thomas Breuel, ICDM Workshop on Internet Multimedia Mining, 2009

We present TubeTagger, a concept-based video retrieval system that exploits web video as an information source. The system performs visual learning on YouTube clips (i.e., it trains detectors for semantic concepts like "soccer" or "windmill") and semantic learning on the associated tags (i.e., it discovers relations between concepts like "swimming" and "water"). This way, a text-based video search free of manual indexing is realized. We present a quantitative study on web-based concept detection comparing several features and statistical models on a large-scale dataset of YouTube content. Beyond this, we report several key findings related to concept learning from YouTube and its generalization to different domains, and illustrate certain characteristics of YouTube-learned concepts, like focus of interest and redundancy. To get a hands-on impression of web-based concept detection, we invite researchers and practitioners to test our web demo.

DFKI-IUPR participation in TRECVID'09 High-level Feature Extraction Task

Damian Borth, Markus Koch, Adrian Ulges, Thomas Breuel, TRECVID Workshop, 2009

Similar to our TRECVID participation in 2008, our main motivation in TRECVID'09 is to use web video as an alternative data source for training visual concept detectors. Web video material is publicly available in large quantities from portals like YouTube and can form a noisy but large-scale and diverse basis for concept learning. Unfortunately, web-based concept detectors tend to be inaccurate when applied to different target domains (e.g., TRECVID data). This "domain change" problem is the focus of this year's TRECVID participation. We tackle it by introducing a linear discriminative approach in which a model is initially learned on a large dataset of YouTube video and then adapted to TRECVID data in a highly efficient online fashion. Results show that this cross-domain learning approach (infMAP 5.1%) (1) outperforms SVM detectors trained purely on YouTube (infMAP 3.2%), (2) performs as well as the linear discriminative approach trained directly on standard TRECVID'09 development data (infMAP 5.1%), but (3) is outperformed by an SVM trained on standard TRECVID'09 development data (infMAP 8.5%).

TubeFiler - an Automatic Web Video Categorizer

Damian Borth, Jörn Hees, Markus Koch, Adrian Ulges, Christian Schulze, Thomas Breuel, Roberto Paredes, ACM Multimedia, Grand Challenge 2009

While current web video platforms offer only limited support for taxonomy-based browsing, hierarchies are powerful tools for organizing content in other application areas. To overcome this limitation, we present a framework called TubeFiler. Its two key features are an automatic multimodal categorization of videos into a genre hierarchy and support for additional fine-grained hierarchy levels based on unsupervised learning. We present experimental results on real-world YouTube clips with a 2-level, 46-category genre hierarchy, indicating that, although the problem is clearly challenging, good category suggestions can be achieved. For example, if TubeFiler suggests 5 categories, it hits the right one (or its supercategory) in 91.8% of cases.

Detecting Pornographic Video Content by Combining Image Features with Motion Information

Christian Jansohn, Adrian Ulges, Thomas Breuel, ACM Multimedia, 2009

With the rise of large-scale digital video collections, automatically detecting adult video content has become increasingly important for applications such as content filtering or the detection of illegal material. While most systems represent videos with keyframes and then apply techniques well known for static images, we investigate motion as another discriminative clue for pornography detection. A framework is presented that combines conventional keyframe-based methods with a statistical analysis of MPEG-4 motion vectors. Two general approaches are followed to describe motion patterns: one based on the detection of periodic motion and one based on motion histograms. Our experiments on real-world web video data show that this combination with motion information improves the accuracy of pornography detection significantly (the equal error rate is reduced from 9.9% to 6.0%). Comparing both motion descriptors, histograms outperform periodicity detection.

Fast Discriminative Linear Models for Scalable Video Tagging

Roberto Paredes, Adrian Ulges, Thomas Breuel, ICMLA, 2009

While video tagging (or "concept detection") is a key building block of research prototypes for video retrieval, its practical use is hindered by the computational effort associated with learning and detecting thousands of concepts. Support vector machines (SVMs), which can be considered the standard approach, scale poorly since the number of support vectors is usually high. In this paper, we propose a novel alternative that offers the benefits of rapid training and detection. This linear discriminative method is based on maximizing the area under the ROC curve. In quantitative experiments on a publicly available dataset of web videos, we demonstrate that this approach offers a significant speedup at a moderate performance loss compared to SVMs, and also outperforms another well-known linear discriminative method based on passive-aggressive online learning (PAMIR).

Style Modeling for Tagging Personal Photo Collections

Mani Duan, Adrian Ulges, Thomas Breuel, X.-Q. Wu, CIVR, 2009

While current image annotation methods treat each input image individually, users in practice tend to take multiple pictures at the same location, with the same setup, or over the same trip, such that the images to be labeled come in groups sharing a coherent "style". We present an approach for annotating such style-consistent batches of pictures. The method is inspired by previous work in handwriting recognition and models style as a latent random variable. For each style, a separate image annotation model is learned. When annotating a batch of images, the style is inferred using maximum likelihood over the whole batch, and the style-specific model is used for accurate tagging. In quantitative experiments on the COREL dataset and real-world photo stock downloaded from Flickr, we demonstrate that, by making use of the additional information that images come in style-consistent groups, our approach outperforms several baselines that tag images individually. Relative performance improvements of up to 80% are achieved, and on the COREL-5K benchmark the proposed method gives a mean recall/precision of 39/25%, which is the best result reported to date.

Video Copy Detection providing Localized Matches

Damian Borth, Adrian Ulges, Christian Schulze, Thomas M. Breuel, Informatiktage, 2009

With the availability of large-scale online video platforms like YouTube, copyright infringement has become a severe problem, and the demand for robust copy detection systems is growing. Such systems must find multiple occurrences of copyright-protected material within video clips that are created, modified, remixed, and uploaded by users. A particular challenge is to find the exact position of a copy in a potentially huge reference database. For this purpose, this paper presents a content-based copy detection system that both detects copies in query videos against a reference database and gives an exact alignment between them. To find and align a matching shot, a fast search for candidates is conducted first; as a second step, an exact alignment is found by minimizing the well-known edit distance from text retrieval via dynamic programming. The introduced approach was evaluated on the publicly available MUSCLE-VCD-2007 data corpus and showed competitive alignment results compared to the ACM CIVR 2007 evaluation.

Learning TRECVID'08 High-Level Features from YouTube

Adrian Ulges, Markus Koch, Christian Schulze, Thomas Breuel, TRECVID Workshop, 2008

We participated in TRECVID's High-level Features task to investigate online video as an alternative data source for concept detector training. Such video material is publicly available in large quantities from video portals like YouTube. In our setup, tags provided by users during upload serve as weak ground truth labels, and training can scale up to thousands of concepts without manual annotation effort. On the downside, online video as a domain is complex, and the labels associated with it are coarse and unreliable, such that a performance loss can be expected compared to high-quality standard training sets. To find out whether it is possible to train concept detectors on online video, our TRECVID experiments compare the same state-of-the-art (visual-only) concept detection systems when (1) training on the standard TRECVID development data and (2) training on clips downloaded from YouTube. Our key observation is that YouTube-based detectors work well for some concepts but are overall significantly outperformed by the "specialized" systems trained on standard TRECVID'08 data (giving an infMAP of 2.2% and 2.1% compared to 5.3% and 6.1%). An in-depth analysis of the results shows that a major reason for this seems to be redundancy in the TV08 dataset.

Multiple Instance Learning from Weakly Labeled Videos

Adrian Ulges, Christian Schulze, Thomas Breuel, SAMT Workshop, 2008

Automatic video tagging systems are targeted at assigning semantic concepts ("tags") to videos by linking textual descriptions with the audio-visual video content. To train such systems, we investigate online video from portals such as YouTube as a large-scale, freely available knowledge source. Tags provided by video owners serve as weak annotations indicating that a target concept appears in a video, but not when it appears. This situation resembles the multiple instance learning (MIL) scenario, in which classifiers are trained on labeled bags (videos) of unlabeled samples (the frames of a video). We study MIL in quantitative, large-scale experiments on real-world online videos. Our key findings are: (1) conventional MIL tends to neglect valuable information in the training data and thus performs poorly; (2) by relaxing the MIL assumption, a tagging system can be built that performs comparably to or better than its supervised counterpart; and (3) improvements by MIL are minor compared to a kernel-based model we proposed recently.

Identifying Relevant Frames in Weakly Labeled Videos for Training Concept Detectors

Adrian Ulges, Christian Schulze, Daniel Keysers, Thomas M. Breuel, CIVR, 2008

A key problem with the automatic detection of semantic concepts (like "interview" or "soccer") in video streams is the manual acquisition of adequate training sets. Recently, we have proposed to use online videos downloaded from portals like youtube.com for this purpose, where tags provided by users during video upload serve as ground truth annotations. The problem with such training data is that it is weakly labeled: annotations are only provided at the video level, and many shots of a video may be "non-relevant", i.e., not visually related to a tag. In this paper, we present a probabilistic framework for learning from such weakly annotated training videos in the presence of irrelevant content. The relevance of keyframes is modeled as a latent random variable that is estimated during training. In quantitative experiments on real-world online videos and TV news data, we demonstrate that the proposed model leads to significantly increased robustness with respect to irrelevant content and to better generalization of the resulting concept detectors.

Navidgator - Similarity Based Browsing for Image & Video Databases

Damian Borth, Christian Schulze, Adrian Ulges, Thomas M. Breuel, KI-2008

A main problem with the handling of multimedia databases is navigating through and searching within the content of a database. The problem arises from the difference between the possible textual description (annotation) of the database content and its visual appearance. Overcoming this so-called semantic gap has been a focus of research for some time. This paper presents a new system for similarity-based browsing of multimedia databases. The system aims at narrowing the semantic gap by using a tree structure built on balanced hierarchical clustering. Using this approach, operators are provided with an intuitive and easy-to-use browsing tool. An important objective of this paper is not only to describe the database organization and retrieval structure, but also to show how the illustrated techniques might be integrated into a single system. Our main contribution is the direct use of a balanced tree structure for navigating through the database of keyframes, paired with an easy-to-use interface offering a coarse-to-fine, similarity-based view of the grouped database content.

Keyframe Extraction for Video Tagging & Summarization

Damian Borth, Adrian Ulges, Christian Schulze, Thomas M. Breuel, Informatiktage, 2008

Online video distributed via platforms like YouTube is becoming increasingly popular. We propose an approach to keyframe extraction based on unsupervised learning for video retrieval and video summarization. Our approach uses shot boundary detection to segment the video into shots and the k-means algorithm to determine cluster representatives for each shot, which are used as keyframes. Furthermore, we perform an additional clustering on the extracted keyframes to provide a video summarization. We tested our methods on a database of videos downloaded from YouTube; the results show (1) improved retrieval and (2) compact summarization examples.

A System that Learns to Tag Videos by Watching Youtube

Adrian Ulges, Christian Schulze, Daniel Keysers, Thomas M. Breuel, ICVS, 2008

We present a system that automatically tags videos, i.e., detects high-level semantic concepts like objects or actions in them. To do so, our system does not rely on datasets manually annotated for research purposes. Instead, we propose to use videos from online portals like youtube.com as a novel source of training data, where tags provided by users during upload serve as ground truth annotations. This allows our system to learn autonomously by automatically downloading its training set. The key contribution of this work is a number of large-scale quantitative experiments on real-world online videos, in which we investigate the influence of the individual system components and how well our tagger generalizes to novel content. Our key results are: (1) fair tagging results can be obtained by a late fusion of several kinds of visual features; (2) using more than one keyframe per shot is helpful; and (3) to generalize to different video content (e.g., another video portal), the system can be adapted by expanding its training set.

Content-Based Video Tagging for Online Video Portals

Adrian Ulges, Christian Schulze, Daniel Keysers, Thomas M. Breuel, MUSCLE/ImageClef Workshop, 2007

Despite the increasing economic impact of the online video market, search in commercial video databases is still mostly based on user-generated metadata. To complement this manual labeling, recent research efforts have investigated the interpretation of the visual content of a video to annotate it automatically. A key problem with such methods is the costly acquisition of a manually annotated training set. In this paper, we study whether content-based tagging can be learned from user-tagged online video, a vast, public data source. We present an extensive benchmark using a database of real-world videos from the video portal youtube.com. We show that a combination of several visual features improves performance over our baseline system by about 30%.
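The last two entries report that combining several visual features improves tagging, and the ICVS 2008 entry names late fusion as the combination strategy. The abstracts do not specify the fusion rule, so the following is only a minimal sketch, assuming score-level fusion by a weighted average of per-feature detector scores for a single concept; the function name `late_fusion` and the feature names are hypothetical illustrations, not the authors' implementation.

```python
from typing import Dict, List, Optional


def late_fusion(
    scores: Dict[str, List[float]],
    weights: Optional[Dict[str, float]] = None,
) -> List[float]:
    """Fuse per-feature detector scores for one concept by a weighted average.

    `scores` maps a (hypothetical) feature name such as "color" or "patches"
    to one detector score per video; all lists must have the same length.
    This weighted-average rule is an assumption, not the papers' method.
    """
    if not scores:
        raise ValueError("at least one feature is required")
    lengths = {len(s) for s in scores.values()}
    if len(lengths) != 1:
        raise ValueError("all score lists must have the same length")
    if weights is None:
        # Uniform weights: a plain average over features.
        weights = {name: 1.0 for name in scores}
    total = sum(weights[name] for name in scores)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    n_videos = lengths.pop()
    # For each video, combine the scores of all feature-specific detectors.
    return [
        sum(weights[name] * scores[name][i] for name in scores) / total
        for i in range(n_videos)
    ]


if __name__ == "__main__":
    fused = late_fusion({"color": [0.2, 0.9], "patches": [0.6, 0.7]})
    # Rank videos by fused score, highest first.
    ranking = sorted(range(len(fused)), key=lambda i: fused[i], reverse=True)
    print(fused, ranking)
```

With uniform weights this reduces to a plain average of the detectors' outputs; non-uniform weights could, for instance, be tuned on held-out data, which is likewise an assumption rather than something stated in the abstracts.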